Generative AI and Misinformation: NTU Singapore Professor’s GCIT Lecture Tackles Media’s New Frontier

【Article by GCIT】

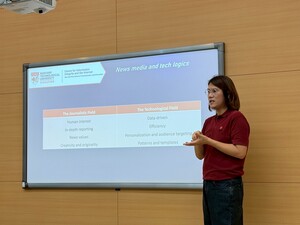

As generative AI technology rapidly advances, the global journalism industry is experiencing an unprecedented transformation. The Master’s Program in Global Communication and Innovation Technology (GCIT) at National Chengchi University (NCCU) hosted a special two-day lecture series from May 19 to 20. The event, curated by GCIT’s Distinguished Professor and Director, Trisha Lin, featured Edson C. Tandoc Jr., Professor of Communication at Nanyang Technological University, Singapore. Professor Tandoc shared his latest research findings and offered an in-depth exploration of the impacts of generative AI and misinformation on the Asian media ecosystem.

During the first day’s workshop, Professor Tandoc presented his pioneering research conducted across the United States and Singapore, examining how university students and news organizations leverage AI tools such as ChatGPT, DeepSeek, and Copilot. The study found that students primarily view AI as an efficiency-enhancing tool for information retrieval, translation, and text refinement, whereas news organizations focus more on AI’s ability to boost productivity and speed. An intriguing on-site experiment challenged participants to distinguish between AI-generated and human-written news content, sparking robust discussions about journalistic quality standards and the authenticity of information.

Drawing on field research with Singaporean journalists, Professor Tandoc noted that newsroom management teams have shown higher acceptance of AI technology than frontline reporters, and that AI adoption in newsrooms is steadily increasing. He warned, however, that despite the proliferation of AI tools, this “tool diversification” does not equate to “content diversity.” Instead, it risks homogenizing journalistic language and logic. His research also tracked the evolution of media narratives since the release of ChatGPT, revealing a shift from early enthusiasm about “technological potential” to growing debates around regulatory responsibility—reflecting society’s deepening concerns over AI ethics and transparency.

Culturally Embedded Misinformation in Asia

On the second day, the public lecture focused on Professor Tandoc’s core research area: the misinformation phenomenon. He introduced a four-element analytical framework—information sources, content, distribution channels, and audience reception—highlighting how misinformation often mimics journalistic formats to exploit social media and instant messaging platforms, further amplified by algorithmic recommendations and social identity dynamics.

Professor Tandoc emphasized the culturally embedded nature of misinformation in Asia. Although sources of misinformation may be similar across regions, the content is often adapted to resonate with local languages, religious beliefs, and cultural contexts. For example, misinformation in China often revolves around pseudo-scientific health claims, while in India it can intertwine vaccine-related concerns with religious taboos, stoking fear within specific communities. Using the COVID-19 pandemic as a case study, Professor Tandoc observed that misinformation centered on disputes over the virus’s origins and vaccine safety, and was often repackaged in vernacular languages and dialects as seemingly credible local remedies. His research found that in Malaysia, misinformation is frequently shared via WhatsApp voice messages, while in India, the same falsehoods repeated in multiple languages have significantly boosted cross-community penetration and complicated regulatory efforts.

Deepfake Integration and the Shift to Discursive Manipulation

As misinformation technologies continue to evolve, Professor Tandoc’s research has expanded beyond content verification to include the detection of suspicious account behaviors. He identified patterns such as high-frequency posting, false personal information, rapid community joining, abnormal syntax, repetitive content, and low engagement rates—all of which suggest the use of AI-generated content. Using the 2025 Singapore elections as an example, he highlighted suspicious accounts flooding social media with political content to create an illusion of “public consensus.”
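To make the idea of behavior-based screening concrete, here is a minimal Python sketch that checks an account against four of the patterns listed above. It is an illustration only, not Professor Tandoc’s method: the `AccountActivity` fields, the `suspicious_signals` function, and every threshold value are hypothetical assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class AccountActivity:
    """Hypothetical per-account summary; field names are illustrative."""
    posts_per_day: float
    duplicate_post_ratio: float    # share of posts repeating earlier text, 0..1
    engagements_per_post: float    # average likes + replies + shares per post
    groups_joined_per_week: int


# Assumed cut-offs for illustration only; these are not values from the lecture.
THRESHOLDS = {
    "high_frequency_posting": 50.0,
    "repetitive_content": 0.6,
    "low_engagement": 1.0,
    "rapid_community_joining": 20,
}


def suspicious_signals(account: AccountActivity) -> list[str]:
    """Return the names of the behavioral signals this account triggers."""
    signals = []
    if account.posts_per_day >= THRESHOLDS["high_frequency_posting"]:
        signals.append("high_frequency_posting")
    if account.duplicate_post_ratio >= THRESHOLDS["repetitive_content"]:
        signals.append("repetitive_content")
    if account.engagements_per_post <= THRESHOLDS["low_engagement"]:
        signals.append("low_engagement")
    if account.groups_joined_per_week >= THRESHOLDS["rapid_community_joining"]:
        signals.append("rapid_community_joining")
    return signals


# Example: an account that posts constantly, repeats itself, and draws no replies.
bot_like = AccountActivity(120.0, 0.9, 0.2, 35)
print(suspicious_signals(bot_like))
```

A rule list like this can only flag accounts for human review. Signals such as false personal information or abnormal syntax would need separate checks, and any real cut-offs would have to come from labeled data rather than the guesses used here.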

Of particular concern is the integration of deepfake technology with fake accounts. Professor Tandoc explained how malicious actors are now combining real human imagery with AI-generated voice synthesis to produce highly persuasive fake video content, enhancing the realism and impact of misinformation. He stressed that the convergence of fake accounts and AI signifies a shift from “fabrication of content” to “manipulation of discursive power,” posing an unprecedented challenge to journalistic integrity and democratic institutions.

The Irreplaceable Value of Journalism

In closing, Professor Tandoc offered students at GCIT a powerful reminder: in an AI-driven media landscape, future journalists must not only master technical tools but also cultivate critical thinking, ethical judgment, and a deep curiosity about the world. “Your mission is not simply to write better than AI,” he said. “It is to think more deeply.”

The two-day lecture series strengthened students’ ability to identify and analyze AI-generated content and misinformation, deepening their understanding of journalism’s digital transformation and laying a solid foundation for future careers in global communication and digital innovation.

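[The Chinese and English versions of this article, its images, and the Master’s Program in Global Communication and Innovation Technology are all subsidized by the Ministry of Culture of the Republic of China.]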

Fax:886-2-29379611
